Public cloud providers benefit from enormous economies of scale and automation, so they can offer their services at a good price. However, when you scale up performance, your hourly fee rises, which results in higher operational expenses.

When you adopt the cloud, you no longer need to invest in hardware, software and other IT infrastructure. Instead, you pay a pay-as-you-go service fee, shifting from CAPEX spending on IT hardware and software to an OPEX model of leasing from a third party. However, moving spending from capitalized assets that are written off over a period to monthly service costs does not necessarily mean that you are reducing your spending. The more complex the hybrid cloud environment is, the bigger the risk that OPEX costs explode.

Predicting spending has become more complex and challenging. Costs need to be monitored and controlled across the whole hybrid environment, both per location and globally consolidated. The focus is on monitoring bandwidth, networking and load capacity, because traffic and usage are the cost drivers.

The vendor’s pricing schemes need to be translated into expected costs, and the cost of services such as getting data into and out of the cloud needs to be fully understood before the Service Level Agreement is signed with the vendor. Prices vary depending on where the data is processed and stored (location-based pricing). As companies expand their cloud usage and leverage the infrastructure at their locations, they add products and solutions, which makes it more difficult to keep costs under control.

With consolidated cloud cost monitoring, companies are able to identify which parts of the company are the heavy spenders on cloud resources and services, and can initiate the necessary cost adjustments.

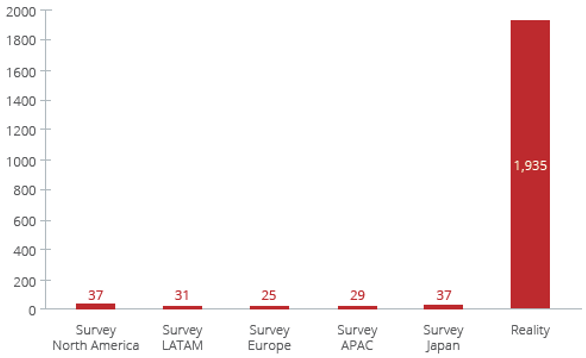
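Consolidated monitoring of this kind can be sketched in a few lines of Python. This is a minimal illustration, not a real billing integration: the record layout, the business unit and location names, the cost figures and the 50% threshold are all assumptions made up for the example.

```python
from collections import defaultdict

# Hypothetical billing records: (business_unit, location, monthly_cost_usd).
# In practice these would come from the providers' billing exports.
records = [
    ("marketing", "eu-west", 1200.0),
    ("marketing", "us-east", 800.0),
    ("engineering", "eu-west", 4500.0),
    ("engineering", "us-east", 3000.0),
    ("finance", "eu-west", 500.0),
]


def consolidate(records):
    """Sum costs per business unit, per location, and globally."""
    by_unit = defaultdict(float)
    by_location = defaultdict(float)
    for unit, location, cost in records:
        by_unit[unit] += cost
        by_location[location] += cost
    total = sum(by_unit.values())
    return dict(by_unit), dict(by_location), total


def heavy_spenders(by_unit, total, share_threshold=0.5):
    """Return units whose share of total spend meets the (assumed) threshold."""
    if total <= 0:
        return []
    return sorted(u for u, c in by_unit.items() if c / total >= share_threshold)


if __name__ == "__main__":
    by_unit, by_location, total = consolidate(records)
    print("Per unit:", by_unit)
    print("Per location:", by_location)
    print("Global total:", total)
    print("Heavy spenders:", heavy_spenders(by_unit, total))
```

The same records feed all three views (per unit, per location, global), which is the point of consolidating before analyzing.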

Monitoring spend is not enough, however. Companies also need to make sure that the services they have subscribed to are actually used. The Service Level Agreement clearly lists what the customer is using and paying for. Servers that are managed on premises can easily be switched off when not in use. In the cloud, however, you no longer manage the servers yourself, so unused resources keep incurring fees until someone explicitly shuts them down.

For example, the IT department may need a virtual machine for a specific business requirement. Once the solution has been deployed to the production system, the data stays in the virtual machine and the machine is no longer used, yet the customer keeps paying for it. Companies need to update their policies so that unused infrastructure in the hybrid cloud is shut down.
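A shutdown policy needs a rule for what counts as "unused". The sketch below shows one possible rule, assuming you can obtain average CPU utilization and the days since the last login for each virtual machine. The `VmUsage` type, the thresholds, the machine names and the prices are hypothetical and would need to be adapted to your own environment.

```python
from dataclasses import dataclass


@dataclass
class VmUsage:
    name: str
    hourly_cost_usd: float
    avg_cpu_percent: float      # average over the review window
    days_since_last_login: int


def find_idle(vms, cpu_threshold=5.0, max_idle_days=30):
    """Flag VMs that are both nearly idle and untouched for a long time."""
    return [
        vm for vm in vms
        if vm.avg_cpu_percent < cpu_threshold
        and vm.days_since_last_login > max_idle_days
    ]


def monthly_waste(vms, hours_per_month=730):
    """Estimate what the given VMs cost per month if left running."""
    return sum(vm.hourly_cost_usd * hours_per_month for vm in vms)


# Made-up inventory for illustration only.
inventory = [
    VmUsage("poc-build-01", 0.20, 1.5, 90),
    VmUsage("prod-web-01", 0.40, 45.0, 1),
    VmUsage("test-db-02", 0.10, 2.0, 45),
]

if __name__ == "__main__":
    idle = find_idle(inventory)
    print("Shutdown candidates:", [vm.name for vm in idle])
    print("Estimated monthly waste (USD):", round(monthly_waste(idle), 2))
```

Requiring both conditions is a deliberate choice: low CPU alone would also flag lightly loaded machines that are still in use.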

Cost risks appear when a workload that moves to or from the hybrid cloud does not take advantage of the cloud's flexibility and elasticity. It is often cheaper to run stable, static workloads on premises than in the cloud. Retrieving data from the cloud for on-premises applications in particular can become expensive, as can moving data between different countries and locations.

Companies also need to compare “what-if” CAPEX scenarios against OPEX business cases. This means that you need to define the service's end of life, calculate the capital investment, maintenance and upgrade costs of the CAPEX case, and then determine the point at which cumulative OPEX spending becomes higher than the CAPEX case. Based on this comparison, you may decide to end or replace the service, or to move it back to your own on-premises data centers.
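Such a comparison comes down to finding the first month in which cumulative OPEX exceeds cumulative CAPEX. The following is a simplified sketch under stated assumptions: a flat monthly cloud fee, flat monthly maintenance, upgrades at fixed intervals, and no discounting or depreciation effects. All function names and figures are invented for illustration.

```python
def cumulative_capex(month, upfront, monthly_maintenance,
                     upgrade_cost, upgrade_every_months):
    """Total on-premises spend after `month` months (simplified model)."""
    upgrades = month // upgrade_every_months
    return upfront + monthly_maintenance * month + upgrade_cost * upgrades


def cumulative_opex(month, monthly_fee):
    """Total cloud service fees after `month` months (flat fee assumed)."""
    return monthly_fee * month


def break_even_month(upfront, monthly_maintenance, upgrade_cost,
                     upgrade_every_months, monthly_fee, end_of_life_months=120):
    """First month in which cumulative OPEX exceeds cumulative CAPEX.

    Returns None if that never happens before the service's end of life.
    """
    for month in range(1, end_of_life_months + 1):
        capex = cumulative_capex(month, upfront, monthly_maintenance,
                                 upgrade_cost, upgrade_every_months)
        if cumulative_opex(month, monthly_fee) > capex:
            return month
    return None


if __name__ == "__main__":
    # Hypothetical what-if scenario, amounts in USD.
    month = break_even_month(
        upfront=100_000,
        monthly_maintenance=1_000,
        upgrade_cost=20_000,
        upgrade_every_months=36,
        monthly_fee=4_000,
    )
    print("OPEX overtakes CAPEX in month:", month)
```

If the function returns `None`, the cloud case stays cheaper over the whole assumed lifetime. A real business case would also account for factors this sketch leaves out, such as variable usage, staffing and the time value of money.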

Cloud technologies change at an enormous pace. Companies face a cost risk if they keep doing what they are doing without looking for better and more cost-effective solutions. Cloud providers innovate frequently, so companies should review their use of the cloud on a regular basis to check whether new features or cloud services could decrease service costs.

The number of cloud providers is also a cost factor. As easy as it is to onboard a new SaaS vendor, for example, handling and integrating multiple vendors becomes more complex and expensive with each one added. The number of vendors should be kept small, but this should not prevent companies from adding a new vendor when it can solve a particular problem or business requirement.

Choosing a private cloud, where you use a dedicated cloud infrastructure, costs more than using a public cloud. Whether you manage it yourself or hire a cloud service provider, and whether you host it in your own data center or off premises, you are still responsible for operating and maintaining the data center, hardware, software, security and compliance. Companies should ensure that cloud costs are transparent, knowing which services are used by whom and why, so that cost surprises are avoided. Believing that everything becomes cheaper once it moves to the cloud is an illusion.